Update ollama

curl -fsSL https://ollama.com/install.sh | sh

If ollama is not the latest version, its API will not behave as expected.
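To confirm which version is installed before going further, a small helper can parse the output of `ollama --version`. This is only a sketch: the minimum version `0.3.0` used in the usage note is a made-up placeholder (the text does not say which release is required), and the function names are illustrative.

```python
from __future__ import annotations

import re
import shutil
import subprocess


def parse_version(text: str) -> tuple[int, ...]:
    """Extract the first dotted version number (e.g. 0.3.6) from text."""
    match = re.search(r"(\d+(?:\.\d+)+)", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    return tuple(int(part) for part in match.group(1).split("."))


def is_at_least(version_text: str, minimum: str) -> bool:
    """Return True if the version found in version_text is >= minimum."""
    have, want = parse_version(version_text), parse_version(minimum)
    width = max(len(have), len(want))
    # Pad with zeros so that 0.3 and 0.3.0 compare as equal.
    have += (0,) * (width - len(have))
    want += (0,) * (width - len(want))
    return have >= want


def installed_ollama_version() -> str | None:
    """Return the raw output of `ollama --version`, or None if ollama is not on PATH."""
    if shutil.which("ollama") is None:
        return None
    result = subprocess.run(
        ["ollama", "--version"], capture_output=True, text=True, check=False
    )
    return result.stdout.strip() or result.stderr.strip()
```

For example, `is_at_least(installed_ollama_version() or "0.0", "0.3.0")` tells you whether to rerun the install script, with `0.3.0` replaced by whatever release you actually need.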

Install graphrag

conda create -n graphrag_3 python=3.10

conda activate graphrag_3

pip install graphrag

Initialize the root directory

python -m graphrag.index --init --root .

The trailing . means the current directory.
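The following steps edit `.env` and `settings.yaml` in the root directory, so it can help to check that initialization actually created them. A minimal sketch, assuming only those two file names from this walkthrough; the helper name is illustrative.

```python
from __future__ import annotations

from pathlib import Path

# Files this walkthrough edits after initialization.
EXPECTED_FILES = (".env", "settings.yaml")


def missing_init_files(root: str | Path = ".") -> list[str]:
    """Return the expected files that are not present under root."""
    root_path = Path(root)
    return [name for name in EXPECTED_FILES if not (root_path / name).is_file()]
```

An empty list from `missing_init_files(".")` means both files are in place.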

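Edit the configuration files

I use the deepseek LLM together with a local ollama embedding model. The LLM can of course also run locally, but the results are not very good. DeepSeek gives away a generous amount of API credit and the quality is decent, so I am using it for now.

Get a DeepSeek API key: DeepSeek

Write the API key into the .env file that was created automatically in the root directory you just initialized.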

GRAPHRAG_API_KEY=sk-aaaaaaaaaaaaaaaaaaa

Then edit the configuration file settings.yaml.

The relevant changes are as follows:

    llm:
      api_key: ${GRAPHRAG_API_KEY}
      type: openai_chat # or azure_openai_chat
      model: deepseek-chat
      model_supports_json: true # recommended if this is available for your model.
      api_base: https://api.deepseek.com/v1
      max_tokens: 4096
      concurrent_requests: 100 # the number of parallel inflight requests that may be made
      tokens_per_minute: 500000 # set a leaky bucket throttle
      requests_per_minute: 100 # set a leaky bucket throttle
      top_p: 0.99
      # request_timeout: 180.0
      # api_version: 2024-02-15-preview
      # organization: <organization_id>
      # deployment_name: <azure_model_deployment_name>
      max_retries: 3
      max_retry_wait: 10
      sleep_on_rate_limit_recommendation: true # whether to sleep when azure suggests

    parallelization:
      stagger: 0.3
      # num_threads: 50 # the number of threads to use for parallel processing

    async_mode: threaded # or asyncio

    embeddings:
      ## parallelization: override the global parallelization settings for embeddings
      async_mode: threaded # or asyncio
      llm:
        api_key: ${GRAPHRAG_API_KEY}
        type: openai_embedding # or azure_openai_embedding
        model: zailiang/bge-large-zh-v1.5
        api_base: http://localhost:11434/api
        # api_base: https://<instance>.openai.azure.com
        # api_version: 2024-02-15-preview
        # organization: <organization_id>
        # deployment_name: <azure_model_deployment_name>
        # tokens_per_minute: 150_000 # set a leaky bucket throttle
        # requests_per_minute: 10_000 # set a leaky bucket throttle
        # max_retries: 10
        # max_retry_wait: 10.0
        # sleep_on_rate_limit_recommendation: true # whether to sleep when azure suggests wait-times
        # concurrent_requests: 25 # the number of parallel inflight requests that may be made
        # batch_size: 16 # the number of documents to send in a single request
        # batch_max_tokens: 8191 # the maximum number of tokens to send in a single request
        # target: required # or optional

    chunks:
      size: 300
      overlap: 100
      group_by_columns: [id] # by default, we don't allow chunks to cross documents

Build the index

python -m graphrag.index --root .

If the embedding model is served by a local ollama, this step fails (as of August 20, 2024).

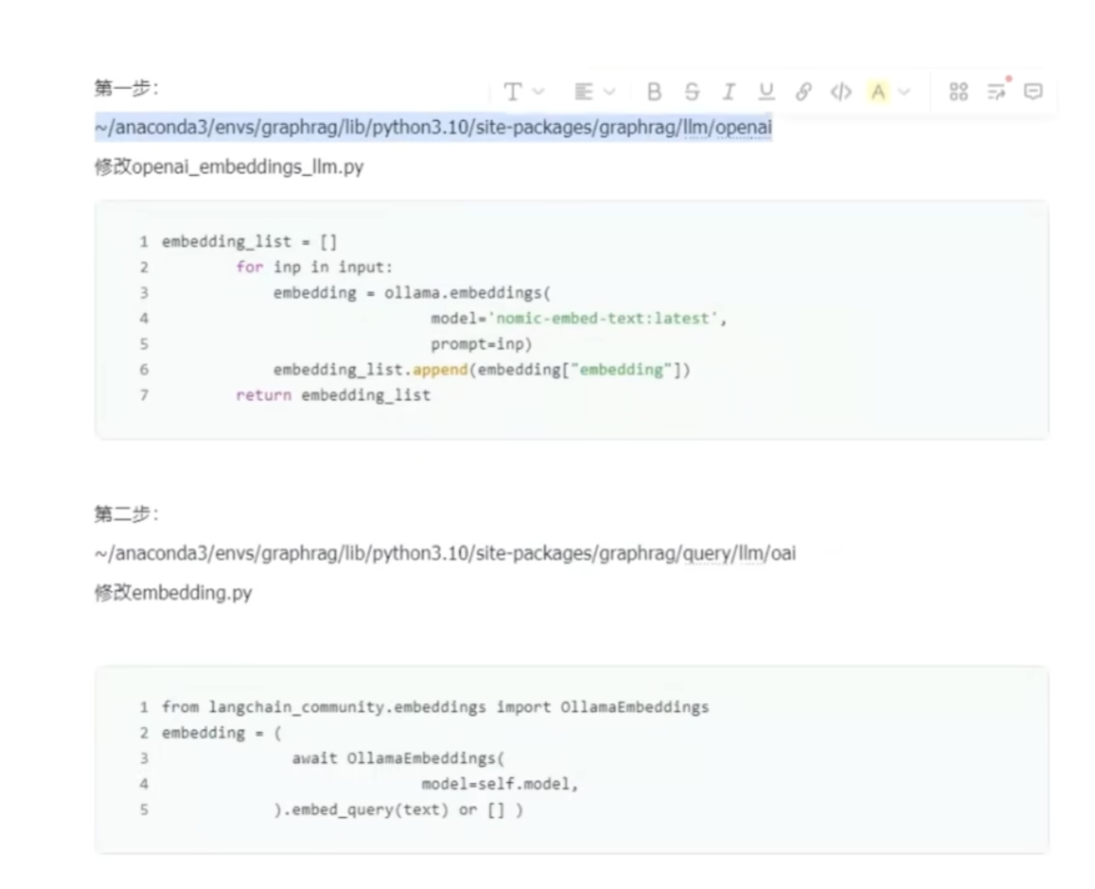
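One plausible reason for the failure (an assumption on my part; the text only shows the error) is that the `openai_embedding` client sends OpenAI-style embedding requests, while ollama's native `/api/embeddings` endpoint expects a different request body and returns a different response shape. The sketch below contrasts the two shapes; the function names are illustrative and nothing here contacts a server.

```python
from __future__ import annotations


def openai_embedding_request(model: str, texts: list[str]) -> dict:
    """Body of an OpenAI-style embeddings request: a batch under 'input'."""
    return {"model": model, "input": list(texts)}


def ollama_embedding_request(model: str, text: str) -> dict:
    """Body of an ollama /api/embeddings request: one text under 'prompt'."""
    return {"model": model, "prompt": text}


def parse_openai_response(body: dict) -> list[list[float]]:
    """OpenAI-style responses carry one item per input under 'data'."""
    return [item["embedding"] for item in body["data"]]


def parse_ollama_response(body: dict) -> list[float]:
    """ollama /api/embeddings returns a single vector under 'embedding'."""
    return body["embedding"]


# An ollama-shaped response has no 'data' key, so an OpenAI-style parser fails on it.
ollama_body = {"embedding": [0.1, 0.2, 0.3]}
try:
    parse_openai_response(ollama_body)
    mismatch = False
except KeyError:
    mismatch = True
```

This mismatch is what the source patch in the next section works around, by calling ollama directly one text at a time.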

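Modify the source code

Using a local ollama embedding model requires modifying the graphrag source code.

Reference video (bilibili): "ollama启动向量模型服务本地部署GraphRAG,从报错到更改,带你定位源码,更改源码" (serving an embedding model with ollama for a local GraphRAG deployment: from the error to the fix, locating and changing the source code)

cd /root/miniconda3/envs/graphrag_3/lib/python3.10/site-packages/graphrag/llm/openai

Two files are modified.

Note: change model='zailiang/bge-large-zh-v1.5:latest' to the embedding model you actually use.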

/root/miniconda3/envs/graphrag_3/lib/python3.10/site-packages/graphrag/llm/openai/openai_embeddings_llm.py

    # Copyright (c) 2024 Microsoft Corporation.
    # Licensed under the MIT License

    """The EmbeddingsLLM class."""

    from typing_extensions import Unpack

    import ollama

    from graphrag.llm.base import BaseLLM
    from graphrag.llm.types import (
        EmbeddingInput,
        EmbeddingOutput,
        LLMInput,
    )

    from .openai_configuration import OpenAIConfiguration
    from .types import OpenAIClientTypes


    class OpenAIEmbeddingsLLM(BaseLLM[EmbeddingInput, EmbeddingOutput]):
        """A text-embedding generator LLM."""

        _client: OpenAIClientTypes
        _configuration: OpenAIConfiguration

        def __init__(self, client: OpenAIClientTypes, configuration: OpenAIConfiguration):
            self.client = client
            self.configuration = configuration

        async def _execute_llm(
            self, input: EmbeddingInput, **kwargs: Unpack[LLMInput]
        ) -> EmbeddingOutput | None:
            args = {
                "model": self.configuration.model,
                **(kwargs.get("model_parameters") or {}),
            }
            # Modified: embed each input with the local ollama model,
            # one text per call, instead of using the OpenAI client.
            embedding_list = []
            for inp in input:
                embedding = ollama.embeddings(
                    model='zailiang/bge-large-zh-v1.5:latest', prompt=inp
                )
                embedding_list.append(embedding["embedding"])
            return embedding_list
            # Original implementation, kept for reference:
            # embedding = await self.client.embeddings.create(
            #     input=input,
            #     **args,
            # )
            # return [d.embedding for d in embedding.data]

/root/miniconda3/envs/graphrag_3/lib/python3.10/site-packages/graphrag/query/llm/oai/embedding.py

    # Copyright (c) 2024 Microsoft Corporation.
    # Licensed under the MIT License

    """OpenAI Embedding model implementation."""

    import asyncio
    from collections.abc import Callable
    from typing import Any

    import numpy as np
    import tiktoken
    from tenacity import (
        AsyncRetrying,
        RetryError,
        Retrying,
        retry_if_exception_type,
        stop_after_attempt,
        wait_exponential_jitter,
    )

    from graphrag.query.llm.base import BaseTextEmbedding
    from graphrag.query.llm.oai.base import OpenAILLMImpl
    from graphrag.query.llm.oai.typing import (
        OPENAI_RETRY_ERROR_TYPES,
        OpenaiApiType,
    )
    from graphrag.query.llm.text_utils import chunk_text
    from graphrag.query.progress import StatusReporter

    from langchain_community.embeddings import OllamaEmbeddings


    class OpenAIEmbedding(BaseTextEmbedding, OpenAILLMImpl):
        """Wrapper for OpenAI Embedding models."""

        def __init__(
            self,
            api_key: str | None = None,
            azure_ad_token_provider: Callable | None = None,
            model: str = "text-embedding-3-small",
            deployment_name: str | None = None,
            api_base: str | None = None,
            api_version: str | None = None,
            api_type: OpenaiApiType = OpenaiApiType.OpenAI,
            organization: str | None = None,
            encoding_name: str = "cl100k_base",
            max_tokens: int = 8191,
            max_retries: int = 10,
            request_timeout: float = 180.0,
            retry_error_types: tuple[type[BaseException]] = OPENAI_RETRY_ERROR_TYPES,  # type: ignore
            reporter: StatusReporter | None = None,
        ):
            OpenAILLMImpl.__init__(
                self=self,
                api_key=api_key,
                azure_ad_token_provider=azure_ad_token_provider,
                deployment_name=deployment_name,
                api_base=api_base,
                api_version=api_version,
                api_type=api_type,  # type: ignore
                organization=organization,
                max_retries=max_retries,
                request_timeout=request_timeout,
                reporter=reporter,
            )

            self.model = model
            self.encoding_name = encoding_name
            self.max_tokens = max_tokens
            self.token_encoder = tiktoken.get_encoding(self.encoding_name)
            self.retry_error_types = retry_error_types

        def embed(self, text: str, **kwargs: Any) -> list[float]:
            """
            Embed text using OpenAI Embedding's sync function.

            For text longer than max_tokens, chunk texts into max_tokens, embed each chunk, then combine using weighted average.
            Please refer to: https://github.com/openai/openai-cookbook/blob/main/examples/Embedding_long_inputs.ipynb
            """
            token_chunks = chunk_text(
                text=text, token_encoder=self.token_encoder, max_tokens=self.max_tokens
            )
            chunk_embeddings = []
            chunk_lens = []
            for chunk in token_chunks:
                try:
                    embedding, chunk_len = self._embed_with_retry(chunk, **kwargs)
                    chunk_embeddings.append(embedding)
                    chunk_lens.append(chunk_len)
                # TODO: catch a more specific exception
                except Exception as e:  # noqa BLE001
                    self._reporter.error(
                        message="Error embedding chunk",
                        details={self.__class__.__name__: str(e)},
                    )

                    continue
            chunk_embeddings = np.average(chunk_embeddings, axis=0, weights=chunk_lens)
            chunk_embeddings = chunk_embeddings / np.linalg.norm(chunk_embeddings)
            return chunk_embeddings.tolist()

        async def aembed(self, text: str, **kwargs: Any) -> list[float]:
            """
            Embed text using OpenAI Embedding's async function.

            For text longer than max_tokens, chunk texts into max_tokens, embed each chunk, then combine using weighted average.
            """
            token_chunks = chunk_text(
                text=text, token_encoder=self.token_encoder, max_tokens=self.max_tokens
            )
            chunk_embeddings = []
            chunk_lens = []
            embedding_results = await asyncio.gather(*[
                self._aembed_with_retry(chunk, **kwargs) for chunk in token_chunks
            ])
            embedding_results = [result for result in embedding_results if result[0]]
            chunk_embeddings = [result[0] for result in embedding_results]
            chunk_lens = [result[1] for result in embedding_results]
            chunk_embeddings = np.average(chunk_embeddings, axis=0, weights=chunk_lens)  # type: ignore
            chunk_embeddings = chunk_embeddings / np.linalg.norm(chunk_embeddings)
            return chunk_embeddings.tolist()

        def _embed_with_retry(
            self, text: str | tuple, **kwargs: Any
        ) -> tuple[list[float], int]:
            try:
                retryer = Retrying(
                    stop=stop_after_attempt(self.max_retries),
                    wait=wait_exponential_jitter(max=10),
                    reraise=True,
                    retry=retry_if_exception_type(self.retry_error_types),
                )
                for attempt in retryer:
                    with attempt:
                        # Modified: embed with the local ollama model.
                        embedding = (
                            OllamaEmbeddings(
                                model=self.model,
                            ).embed_query(text)
                            or []
                        )
                        # Original implementation, kept for reference:
                        # embedding = (
                        #     self.sync_client.embeddings.create(  # type: ignore
                        #         input=text,
                        #         model=self.model,
                        #         **kwargs,  # type: ignore
                        #     )
                        #     .data[0]
                        #     .embedding
                        #     or []
                        # )
                        return (embedding, len(text))
            except RetryError as e:
                self._reporter.error(
                    message="Error at embed_with_retry()",
                    details={self.__class__.__name__: str(e)},
                )
                return ([], 0)
            else:
                # TODO: why not just throw in this case?
                return ([], 0)

        async def _aembed_with_retry(
            self, text: str | tuple, **kwargs: Any
        ) -> tuple[list[float], int]:
            try:
                retryer = AsyncRetrying(
                    stop=stop_after_attempt(self.max_retries),
                    wait=wait_exponential_jitter(max=10),
                    reraise=True,
                    retry=retry_if_exception_type(self.retry_error_types),
                )
                async for attempt in retryer:
                    with attempt:
                        # Modified: embed with the local ollama model.
                        # embed_query is synchronous and returns a list, which
                        # cannot be awaited, so the async aembed_query is used here.
                        embedding = (
                            await OllamaEmbeddings(
                                model=self.model,
                            ).aembed_query(text)
                            or []
                        )
                        # Original implementation, kept for reference:
                        # embedding = (
                        #     await self.async_client.embeddings.create(  # type: ignore
                        #         input=text,
                        #         model=self.model,
                        #         **kwargs,  # type: ignore
                        #     )
                        # ).data[0].embedding or []
                        return (embedding, len(text))
            except RetryError as e:
                self._reporter.error(
                    message="Error at embed_with_retry()",
                    details={self.__class__.__name__: str(e)},
                )
                return ([], 0)
            else:
                # TODO: why not just throw in this case?
                return ([], 0)
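The docstrings in this file say that long texts are embedded chunk by chunk and then combined "using weighted average". As a rough, dependency-free illustration of that step (the file itself uses `np.average` and `np.linalg.norm`; the function name below is mine), each chunk vector is weighted by its chunk length and the result is scaled to unit length:

```python
from __future__ import annotations

import math


def combine_chunk_embeddings(
    embeddings: list[list[float]], lengths: list[int]
) -> list[float]:
    """Length-weighted average of chunk embeddings, normalized to unit length."""
    total = sum(lengths)
    if not embeddings or total == 0:
        raise ValueError("need at least one chunk with a non-zero length")
    dim = len(embeddings[0])
    averaged = [
        sum(vec[i] * n for vec, n in zip(embeddings, lengths)) / total
        for i in range(dim)
    ]
    norm = math.sqrt(sum(x * x for x in averaged))
    return [x / norm for x in averaged]


# Two toy 2-d chunk vectors; the first chunk is three times longer,
# so the combined vector leans toward it.
combined = combine_chunk_embeddings([[1.0, 0.0], [0.0, 1.0]], [3, 1])
```

Here the weighted average is (0.75, 0.25) before normalization. This also shows why every chunk failing is a problem: with no vectors left there is nothing to average.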

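The modified source code needs extra packages

pip install langchain

pip install ollama

The modified embedding.py imports OllamaEmbeddings from langchain_community. If that import fails after installing langchain, also run pip install langchain-community.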
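Before rebuilding the index, it is cheap to check that the modules the patched files import can be found in the active environment. A small sketch using only the standard library; the helper name is illustrative, and the module names are the top-level packages imported in the two files above.

```python
from __future__ import annotations

from importlib.util import find_spec


def missing_modules(names: list[str]) -> list[str]:
    """Return the top-level module names that cannot be found on the import path."""
    return [name for name in names if find_spec(name) is None]


# Top-level packages imported by the two patched files.
REQUIRED = ["ollama", "langchain_community"]
```

Calling `missing_modules(REQUIRED)` inside the graphrag_3 environment should return an empty list once the packages are installed.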

Build the index again

python -m graphrag.index --root .

This time it succeeds.
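During indexing, the input documents are split according to the chunks settings in settings.yaml (size: 300, overlap: 100). The sketch below shows the general sliding-window idea behind such settings: consecutive chunks share `overlap` tokens, so each new chunk starts `size - overlap` tokens after the previous one. It is not graphrag's actual chunker, which works on tokenizer output and may differ in details; the function name is illustrative.

```python
from __future__ import annotations

from typing import Sequence


def sliding_chunks(tokens: Sequence, size: int = 300, overlap: int = 100) -> list:
    """Split tokens into windows of `size` that overlap by `overlap` tokens."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    step = size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + size])
        if start + size >= len(tokens):
            break  # this window already reached the end of the input
    return chunks


# 700 stand-in tokens give windows starting at 0, 200 and 400.
chunks = sliding_chunks(list(range(700)))
```

A larger overlap keeps more context shared between neighboring chunks, at the cost of more chunks and therefore more LLM and embedding calls.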

Use graphrag

python -m graphrag.query --root . --method local ""

python -m graphrag.query --root . --method global ""

Put your question inside the quotes.

Try tuning the prompts

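python -m graphrag.prompt_tune --config CONFIG --root . --domain "Chinese Financial Services" --language Chinese --chunk-size 300 --output prompt-bank1

Here CONFIG is presumably a placeholder for the path to your configuration file, for example ./settings.yaml.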
